Gen AI video smackdown

OpenAI just announced Sora, its first text-to-video generation AI model, and in one fell swoop it seems to have outdone the competition. Check out their gallery page to see what we mean, because the snippets below don’t do it justice.

Sora, the new benchmark?

With Sora arriving on the scene, we thought it would be a good opportunity for a whistle-stop tour of the AI video generation landscape, to see and compare what’s out there. For this exercise, we’ll use one of the Sora videos as a baseline for assessing the other video generation platforms. The video we’ll use for comparison is the one of the cute golden retriever puppies, generated from this prompt:

“A litter of golden retriever puppies playing in the snow. Their heads pop out of the snow, covered in snow.”

Whilst we can’t generate videos with Sora ourselves, we can ascertain a few things from the examples and information provided by OpenAI:

Quality of output: 4.5/5 - whilst not perfect, it’s pretty close to the real deal!

Adherence to prompt: 4.5/5 - in almost all examples, the prompts included details about the subject, foreground, background and even camera motion, and in every case the output seemed to follow those instructions with a high degree of accuracy.

Control: 3/5 - whilst the model seems to follow prompt guidance, there is no mention of any additional controls to direct the outputs.

Duration: 4.5/5 - Sora can generate up to 60 seconds of video, far more than other video creation tools, which are limited to around 4 seconds.

Overall, we’re super impressed with Sora and can’t wait to get access to use it. But, what about the others?

RunwayML

Before Sora, many considered RunwayML, with its Gen-2 model, to be leading the way in video generation (and in some ways it still is, as Sora hasn’t been publicly released yet).

By way of comparison, giving RunwayML the exact same golden retriever prompt produces an underwhelming result. With just 4 seconds of video (Runway’s default) and a lack of any real motion (just some falling snow), its adherence to the prompt is far from good: note that no heads “pop out of the snow” as requested.

Tweaking the prompt slightly and using “Extend” to add another 4 seconds improves the result a little, but there are still no heads popping out of the snow.

From:

“A litter of golden retriever puppies playing in the snow. Their heads pop out of the snow, covered in snow.”

To:

“A litter of golden retriever puppies playing in the snow, with their heads popping out of the snow, covered in snow.”

One area where RunwayML seems ahead of Sora is motion control, including its Motion Brush feature. This lets you start with a source image (in the example below, the image is from Midjourney) and define areas and types of motion. Think of it as guiding the AI.

But, as you can see, the results are still far from perfect.

Quality of output: 3.5/5 - the output is still clearly recognisable as AI-generated.

Adherence to prompt: 2.5/5 - once you start adding more than one or two elements to the prompt, RunwayML starts to ignore your requests.

Control: 4.5/5 - Motion Brush, pan, tilt, zoom … a lot of options to control the video output.

Duration: 2/5 - Limited to 4 seconds (with option to extend by another 4 seconds)

Leonardo.ai

Who doesn’t love an Aussie generative AI startup! Leonardo is a great tool that does much more than video: image generation in many styles, all behind an easy interface. For this experiment, though, we’re only assessing its video capability.

Firstly, Leonardo doesn’t do text-to-video yet, only supporting image-to-video. So, we used Leonardo to create the starting image, which it did a good job of, and then asked it to turn the image into video, which it didn’t do a great job of. Result below.

Quality of output: 2/5 - The output is not only recognisable as AI-generated, it also distorts quickly (look at the top-right puppy).

Adherence to prompt: 0/5 - Leonardo doesn’t support text-to-video, so there is no way to prompt the video itself.

Control: 1/5 - No real option to control the video besides a “motion strength” slider.

Duration: 1.5/5 - Limited to 4 seconds

Pika Labs

Like Leonardo, Pika does a lot more than video, but here we’re assessing it only on its video capability. Using the same prompt, this is the result.

Quality of output: 1.5/5 - The output is recognisable as AI-generated and is just generally off (I tried multiple versions, with equally disappointing results).

Adherence to prompt: 1/5 - Pika does support text-to-video prompting, but its adherence is very weak.

Control: 2.5/5 - Options to pan, tilt, zoom, but no real degree of control.

Duration: 1/5 - Limited to 3 to 4 seconds, which is very short.

Stable Video Diffusion XT

The final model we’re comparing against is Stable Video Diffusion XT, from Stability AI. We love that Stability is pushing the open source scene forward, and being open source is one of SVD’s biggest benefits. SVD doesn’t do text-to-video, only image-to-video, so we used Midjourney to create the starting image and handed that to SVD to animate. Results below.

Quality of output: 0.5/5 - Only the snow is animated, the movement is jerky and the puppies don’t move at all. Disappointing.

Adherence to prompt: 0.5/5 - No ability to prompt

Control: 1/5 - No real control options, just some basic parameter settings (e.g. fps).

Duration: 0.5/5 - At barely a second long, SVD’s outputs are the shortest of the lot.

Summary

A summary table of our findings across the AI video generation tools shows Sora’s amazing puppies taking a clear lead on every measure except control, where RunwayML scores higher.
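This small Python sketch makes the comparison concrete. It restates the scores from each section above and totals them out of 20. The scores are our own subjective ratings, and Sora’s are estimates based on OpenAI’s published examples. The names `SCORES`, `totals` and `leader` are illustrative only and are not part of any tool’s API.

```python
# Scores (out of 5) restated from this article. Sora's scores are estimates
# based on OpenAI's examples, since we couldn't generate videos with it.
SCORES = {
    "Sora":           {"quality": 4.5, "prompt": 4.5, "control": 3.0, "duration": 4.5},
    "RunwayML Gen-2": {"quality": 3.5, "prompt": 2.5, "control": 4.5, "duration": 2.0},
    "Leonardo.ai":    {"quality": 2.0, "prompt": 0.0, "control": 1.0, "duration": 1.5},
    "Pika Labs":      {"quality": 1.5, "prompt": 1.0, "control": 2.5, "duration": 1.0},
    "SVD XT":         {"quality": 0.5, "prompt": 0.5, "control": 1.0, "duration": 0.5},
}

CRITERIA = ["quality", "prompt", "control", "duration"]


def totals(scores):
    """Sum each tool's four criterion scores (maximum 20)."""
    return {tool: sum(s[c] for c in CRITERIA) for tool, s in scores.items()}


def leader(scores, criterion):
    """Return the tool with the highest score on a single criterion."""
    return max(scores, key=lambda tool: scores[tool][criterion])


def print_table(scores):
    """Print the scores as a plain-text table, best total first."""
    print(f"{'Tool':<16}" + "".join(f"{c:>10}" for c in CRITERIA) + f"{'Total':>8}")
    for tool, total in sorted(totals(scores).items(), key=lambda kv: -kv[1]):
        row = "".join(f"{scores[tool][c]:>10}" for c in CRITERIA)
        print(f"{tool:<16}{row}{total:>8}")


if __name__ == "__main__":
    print_table(SCORES)
    for c in CRITERIA:
        print(f"Leader on {c}: {leader(SCORES, c)}")
```

Running it ranks Sora first overall (16.5/20, ahead of RunwayML’s 12.5/20). It also shows that control is the one measure where RunwayML, rather than Sora, comes out on top.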

A word about workflows

While this exercise looked at individual AI video generation tools, emerging AI artists are combining multiple AI tools to achieve higher-quality video. They use Midjourney to create consistent, high-quality static shots, which are then turned into motion with a video AI tool. They use ElevenLabs to add sound effects and voiceovers, and Topaz to upscale the videos and give them a cinematic quality. For music, they turn to generation tools such as Soundraw.io and Google MusicLM. Check out Runway Studios to get a sense of what’s possible with these new AI-powered workflows.

Don’t write anybody off yet!

Before you write off everyone but Sora on the video generation side of things, it’s worth remembering how fast image generation models moved in the space of just a couple of years. Nothing demonstrates this better than Midjourney’s improvements from version to version, shown below (the same prompt run through different versions of Midjourney).

The progress image models like Midjourney have made in just 2 years, together with the recently announced Sora, should leave little doubt about how far video generation will go over the next few months and years. Whilst Sora is certainly impressive, OpenAI won’t be the only ones disrupting the video generation landscape.

What about the laws?

In the US, robocalls using AI-generated voices were recently made illegal. A Time Under Tension Collective member recently wrote a great article highlighting the need for Australia to start adopting laws around the use of generated content. There is a clear need to protect citizens against what is sure to be a tide of fake news, deepfakes and new-age scams.

In the meantime, the responsibility for deploying AI responsibly sits with the organisations that are playing with, exploring and adopting AI technology.

At Time Under Tension we recently launched our Responsible AI Charter, which is based on Australia’s AI Ethics Framework.

“We are committed to seeing AI used in a responsible, sustainable and ethical way, to advance humanity safely.”

What’s coming up

We love seeing how fast video generation is progressing, and we can see a time, soon, when the impossible becomes very possible. Some thoughts on what’s coming:

Fine-tuning video models - using your own images or video to train video models, similar to some of the image models available today. For brands, for example, this could open up a world of product video creation from a single prompt.

Real-time rendering of video - whilst the compute power needed to generate a video today is vast, we can expect it to fall significantly over time. As it does, real-time rendering of generated video becomes a likely reality, which opens up a whole world of potential for video games.

Hollywood in trouble - with the recent writers’ and actors’ strikes in Hollywood, it’s clear that the day their concerns become reality is nearly here. Generating Hollywood-style movies on the go is not an unrealistic mid-term future.

Work with Time Under Tension

We elevate Customer and Employee Experiences with generative AI. Our advisory team helps you understand what is possible and how it relates to your business. We provide training so you can get the most out of generative AI apps such as ChatGPT and Midjourney. Our technical team builds bespoke tools to meet your needs. You can find us at www.timeundertension.ai/contact

A handful of Gen AI news

Here are five of the most interesting things we have seen and read in the last week:

Google have been hard at work releasing different Gemini models. The most impressive of these, Gemini 1.5 Pro, has a 1,000,000-token context window, meaning it can analyse vast amounts of content (in their demo you can see Gemini analysing an entire movie uploaded to it). By comparison, OpenAI’s GPT-4 Turbo has a 128,000-token context window.

Ever wondered what conversational podcasts look like? Dexa uses the power of AI to “explore the knowledge of trusted experts, that was previously locked in podcasts” (and just raised $6m of capital too).

LA-based Creative Director Alistair Green created a fake Land Rover commercial using Midjourney and Stable Video Diffusion.

Whilst not strictly generative AI, the Vesuvius Challenge was too good not to mention here. It showcases how AI is being used to read and decipher 2,000-year-old scrolls that cannot be opened because they are highly carbonised and ashen.

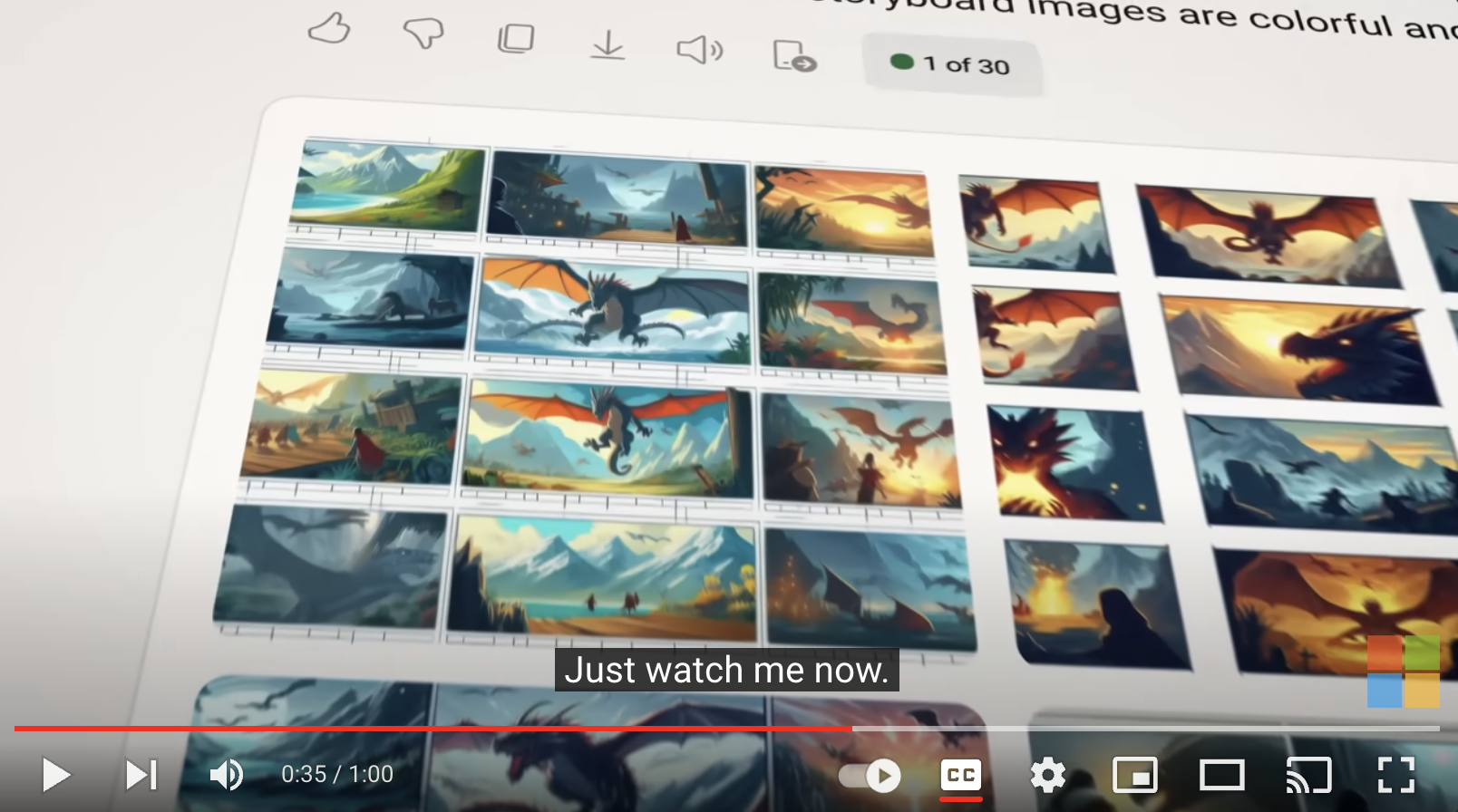

Microsoft’s Super Bowl commercial for Copilot gives a glimpse into how the big companies see us adopting AI: to create movies, write games, teach us and do our branding.